Much of the research on responsible AI focuses on algorithmic bias. Bias, as defined in this scope, refers to inequitable or unjust access to societal resources or privileges, including being denied access to them entirely. Bias and discernment are a natural part of any healthy ecosystem. They help communities create boundaries that support their virtues and goals. Biases that give rise to discriminatory practices within a community or society most often stem from biases that inequitably subject marginalized groups to disparate treatment or disparate impact [15].

Discrimination is a form of enacted violence upon a specific group. We use the term "violence" deliberately. Viewed through a humanistic lens, it conveys how gravely discriminatory actions can affect the trajectory, safety, health, wellbeing, and overall lives of those who experience them. Discrimination rarely occurs in a vacuum, and many discriminatory experiences reinforce structural and emotional burdens that these groups already carry.

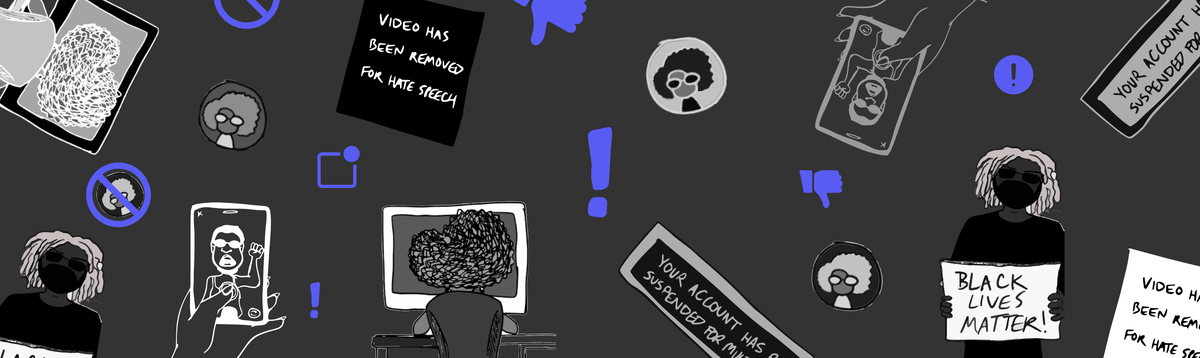

This paper focuses on how biases in AI and automated systems create microaggressions [16], specifically toward Black women. To illustrate these microaggressions, we trace a path from system bias, through algorithmic discrimination, to an example of a microaggression drawn from the shared experiences of Black women.

Black Womanhood within the Tech Ecosystem

From Bias to Discrimination

Systemic Enactments of Algorithmic Discrimination

Structural Enactments of Algorithmic Discrimination

Toward a Solution

Bibliography