Without accountability and transparency from the companies that create systems for such high-risk industries, racial bias against Black women will persist. Companies need to implement rigorous testing stages before launching AI systems that only reinforce the implicit biases of those they hire. To truly be efficient, effective, and distanced from corruption, these companies will need to go back and remove the prejudice from the algorithmic foundation of their systems, and stop deploying systems quickly and without absolute confidence that racial and gendered biases have been addressed. We present a few empirical perspectives on addressing algorithmic microaggressions against Black women through the design, development, and integration of AI-based technologies in our society.

- Attesting Bias - Fairness through awareness.

Clearly state how biases are enacted at every level of development of current AI-based products. Within systems already in place, provide affordances for feedback that allow users to report discriminatory features, for example by partnering with organizations like Trust Black Women that amplify the voices of Black women in response to injustice.

- Diversified experiences of Humans in the Loop

When we think of humans in the loop, we often think of the design and development processes that create technology-driven products that go to market. It is often difficult to bring a breadth of experiences into this process in order to design and develop truly unbiased AI. This is why we reinforce the idea that, even in systems that have already been implemented, creating systemic affordances that reduce tokenism and allow marginalized subcultures to provide adequate and timely feedback can help mitigate the impacts of AI-enacted injustices.

- Seek solutions through non-dominant frameworks.

Many subcultures and indigenous communities have knowledge and practices based on alternatives to Western systems of domination. Leveraging these constructs may yield new algorithms or frameworks for AI development and implementation. The goal may be to create a new paradigm for algorithmic development that takes a genuinely interdisciplinary perspective and allows for input from the social sciences, computational sciences, humanities, and cultural experts. Additionally, decouple the development of AI systems from corporate profit.

- Mitigate the poor tax.

The poor tax refers to the indirect consequences borne by the poor or disenfranchised, who face higher prices or financial burdens for lower-quality goods or services.

- There is no easy fix.

As long as AI systems are trained on an imperfect society, there will always be a need to apply a critical lens to how these systems impact or reinforce unfair societal disparities.

- Give users agency.

Users should have the option to opt out or to choose from alternative or ‘human-based’ recommender systems.

Black Womanhood within the Tech Ecosystem

From Bias to Discrimination

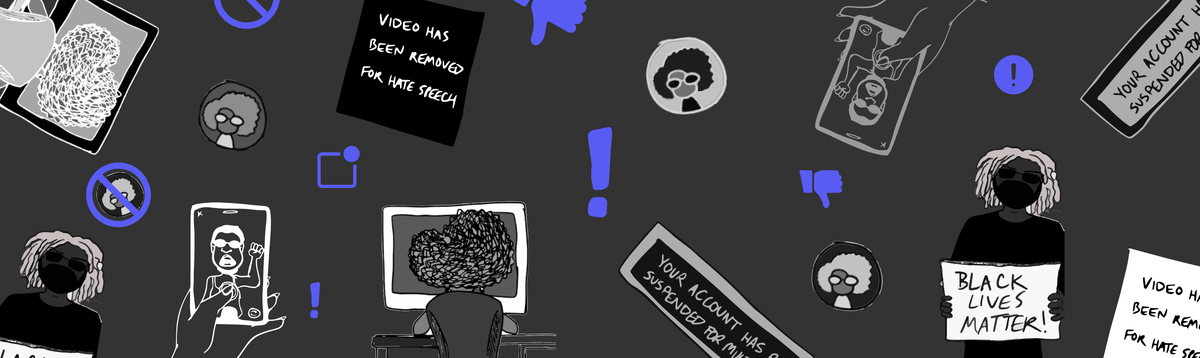

Systemic Enactments of Algorithmic Discrimination

Structural Enactments of Algorithmic Discrimination

Toward a Solution

Bibliography
