With about 69% of leading coders and developers in the US alone being white, cisgender men between the ages of 35 and 50, building systems that people of all backgrounds, ethnicities, religions, and perspectives use, a point of focus is the lack of attention to bias within the work they carry out. We see Black women as among the most vulnerable groups with regard to algorithmic biases. We can see this through America’s drive toward face recognition for law enforcement, the most racist legal system we have today.

The data needed for this development raises numerous concerns about privacy, unfair treatment, and the uncontrollable aftermath of systemic racism and stereotyping. Face recognition technologies are least accurate for darker-complexioned women between the ages of 18 and 30. And with companies like Amazon marketing their work to law enforcement despite the immense discrepancies in its current state, innocent Black women will be misidentified and incarcerated at higher rates. “These algorithms consistently demonstrate the poorest accuracy for darker [women, emphasizing the lack bias in the individuals creating the foundation encodings]” [10].

In the AI development lifecycle there are several moments where biases can be perpetuated.

#1 Construct Bias: When a measure is not equivalent across cultures

Example of the Bias: Systems designed to flag inflammatory language may be based on standard English phrasing or language meanings.

Example of the Discrimination: Among African-American communities, many colloquialisms are derived from lifestyle appropriations where words and phrases may often take on additional meanings. When using colloquial variations of language in technology-mediated social spaces, there may be inapplicable flags or censorship of innocuous posts.

Example of the Microaggression:

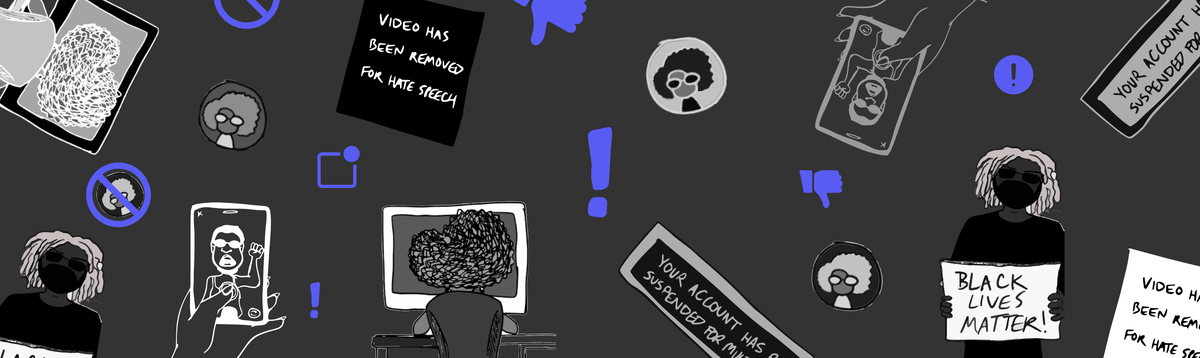

Censorship, respectability policing, and access to affinity spaces for Black women have a history of challenges. Navigating a society where access to social media may impact your work, familial connections, or access to emotional support structures means that censorship could have detrimental impacts on your lifestyle. [Image by Mia S. Shaw Artist/Maker Educator/PhD candidate.]

In a world where Black bodies and Black culture ride the line between acceptable and deplorable, Black women expend a tremendous amount of emotional labor, self-regulating how they show up in society. In automation-regulated environments, Black women may be led to feel like there are sacred or safe spaces to speak freely while learning that there are invisible systems that could perceive their words, thoughts, feelings, or images as inappropriate, harmful, or punishable.

#2 Method Bias: When the research instrument or method is not adjusted across cultures

Example of the Bias: Image recognition data sets that are tested only on available or majority populations (even among disambiguated groups) whose cultural and phenotypical nuances are required to achieve access.

Example of the Discrimination: When enacted in gateway or heavily policed communities (e.g., TSA at airports), these systems can cause unnecessary delays, annoyance, or triggering experiences regarding bodily search, removal, or restraint.

Example of the Microaggression:

When we discuss the intersectional identities of Black women, it is not enough to just consider the two major classifications within our broader society. Black women have many other very important cultural factors that are part of their identity. When a woman who, for many reasons, intentionally covers part of her body or hair is asked to segregate herself to be searched every time she desires to do anything within our society, it creates labor and inconvenience that reinforce the broader narrative that our bodies are not valued. Asking a woman to reveal even non-sexual parts of her identity is a form of structural violence against bodily agency. Covering one’s self or wearing spiritual or cultural items underneath one’s clothing may be intentional, private, and sacred parts of our identity. They are not intended for public awareness or consumption. [Image by Mia S. Shaw Artist/Maker Educator/PhD candidate.]

#3 Item Bias: Bias in the measuring instrument and scale

Example of the Bias: The broader society agrees that certain words, phrases, or actions are harmful without recommender systems providing for contextualization.

Example of the Discrimination: Violence, hate speech, and discrimination are all issues that negatively impact Black women in our society. Not allowing Black women the means to discuss, share, or build awareness of their experiences because those experiences involve volatile subjects can further silence the oppressed.

Example of the Microaggression:

Algorithms that are designed to keep out hate speech and discourse that empowers discriminatory actions have misclassified the post she shared as pro-discriminatory or hate speech. Black women who often rely on social media to build awareness and find networks of support are now silenced when they are most vulnerable. The impact of victim shaming often means that people who are subjected to discriminatory practices or biases may not feel comfortable seeking justice. This, in turn, makes it difficult for Black women and oppressed people to speak out about their pain and experiences, which further reinforces already present narratives that make it difficult for Black women to feel like valued and protected members of our world. [Image by Mia S. Shaw Artist/Maker Educator/PhD candidate.]
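One way to see how this kind of misclassification can arise is to look at a filter that matches words with no sense of context. The sketch below is a deliberately simplified illustration, not a description of any real platform's moderation system. The term list `FLAGGED_TERMS`, the function `is_flagged`, and the sample posts are all hypothetical.

```python
import re

# Hypothetical list of terms a context-free filter might treat as harmful.
FLAGGED_TERMS = {"threat", "attack"}


def is_flagged(post: str) -> bool:
    """Return True if the post contains any flagged term, ignoring context."""
    words = set(re.findall(r"[a-z']+", post.lower()))
    return bool(words & FLAGGED_TERMS)


# Invented sample posts: one hostile message, one person seeking support,
# and one unrelated post.
hostile_post = "This is a threat."
support_post = "I received a threat at work today and I need support."
neutral_post = "Thank you all for the support."

results = {
    "hostile": is_flagged(hostile_post),
    "support": is_flagged(support_post),
    "neutral": is_flagged(neutral_post),
}
print(results)
```

Under these assumptions, the hostile message and the post from someone seeking support are treated identically, because the filter sees only the shared word and not who is speaking or why.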

#4 Disparate Treatment as a Microaggression

Example of the Bias: Automated healthcare systems utilize historical data that reflect biased and discriminatory treatment.

Example of the Discrimination: As seen in the healthcare system, AI systems were initially introduced to help hospitals streamline tasks such as checking patients in and collecting data on their current health state to estimate the best caregiver, care process, or medication. However, in numerous hospitals, closer examination of persistent irregularities in how these systems operated revealed a marked pattern of Black patients receiving less care than their white counterparts. “People who self-identified as black were generally assigned lower risk scores than equally sick white people” [12]. In order for Black individuals to be treated by providers, they had to be in a significantly worse state.

Example of the Microaggression:

A Black woman who is experiencing health challenges but is not being afforded full therapeutic support mechanisms may internalize social factors that deny agency and promote silencing of the pain and struggles of the oppressed. And with Black women being the most overlooked group of patients in the medical field, these restrictions become amplified. Nurses and doctors often believe that Black women exaggerate their pain levels, which contributes to higher rates of maternal mortality, disparities in infertility, and various health conditions. Long-term consequences of untreated or under-addressed medical concerns can include time off from work, job loss, comorbidities, emotional and mental challenges, and distrust of the efficacy of the medical system. [Image by Mia S. Shaw Artist/Maker Educator/PhD candidate.]
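The risk-score pattern quoted above suggests one audit a hospital or vendor could run: compare the scores assigned to patients who are equally sick. The sketch below assumes a hypothetical record format in which `conditions` (a count of active chronic conditions) stands in for how sick a patient is. The field names, group labels, and numbers are invented for illustration and do not come from [12].

```python
from collections import defaultdict
from statistics import mean


def score_gaps(patients, group_x, group_y):
    """For each sickness level, return mean score of group_x minus group_y.

    A consistently positive gap means group_y receives lower risk scores
    than group_x at the same level of illness.
    """
    by_level = defaultdict(lambda: defaultdict(list))
    for p in patients:
        by_level[p["conditions"]][p["group"]].append(p["risk_score"])

    gaps = {}
    for level, groups in sorted(by_level.items()):
        # Only compare levels where both groups have at least one patient.
        if groups[group_x] and groups[group_y]:
            gaps[level] = mean(groups[group_x]) - mean(groups[group_y])
    return gaps


# Invented toy records (risk scores on a hypothetical 0-100 scale).
toy_patients = [
    {"group": "group_a", "conditions": 2, "risk_score": 40},
    {"group": "group_a", "conditions": 2, "risk_score": 60},
    {"group": "group_b", "conditions": 2, "risk_score": 20},
    {"group": "group_b", "conditions": 2, "risk_score": 40},
    {"group": "group_a", "conditions": 4, "risk_score": 70},
    {"group": "group_a", "conditions": 4, "risk_score": 90},
    {"group": "group_b", "conditions": 4, "risk_score": 50},
    {"group": "group_b", "conditions": 4, "risk_score": 60},
]

gaps = score_gaps(toy_patients, "group_a", "group_b")
print(gaps)
```

In this toy data the gap is positive at every sickness level, which is the shape of disparity the quotation describes. A real audit would need a clinically validated measure of illness and far more records.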

#5 Safety and Security

Personal safety and security is a topic that makes many women feel vulnerable. Black women are not exempt. As intersectionality comes into play, Black women also need to consider the impacts of recent tragedies regarding the over-policing of Black bodies by those employed to serve and protect [11]. For many, there is a fractured relationship with the police or structured security forces that causes mental and emotional anguish when we are forced to engage.

Example of the Bias: Facial recognition algorithms that are not trained on a variety of phenotypes can potentially falsely identify members of underrepresented communities.

Example of the Discrimination: Surveillance software may falsely identify someone of color as a suspect in an ongoing or past crime.

Example of the Microaggression:

Many Black women have deeply personal relationships with Black men in their families and their larger communities. The emotional burden of considering your own bodily safety, coupled with the considerations of your partners or spouses and dependents can cause undue stress in interpersonal relationships as well as in how we perceive and interact with police [17]. [Image by Mia S. Shaw Artist/Maker Educator/PhD candidate.]
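A basic check for the failure described in this section is to report error rates separately for each demographic group rather than as one overall number. The following is a minimal sketch under assumed inputs: each record is a hypothetical `(group, predicted_match, true_match)` tuple from a face-matching test, and the group labels and values are invented. The false match rate here is the share of true non-matches that the system wrongly reported as matches.

```python
from collections import defaultdict


def false_match_rate_by_group(records):
    """Return {group: false match rate} from (group, predicted, actual) tuples."""
    false_matches = defaultdict(int)
    non_matches = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:  # the two faces truly belong to different people
            non_matches[group] += 1
            if predicted:  # but the system said they were the same person
                false_matches[group] += 1
    return {g: false_matches[g] / n for g, n in non_matches.items()}


# Invented toy test results; every pair is a true non-match.
toy_records = [
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", True, False),
    ("group_a", False, False),
    ("group_b", True, False),
    ("group_b", True, False),
    ("group_b", False, False),
    ("group_b", False, False),
]

rates = false_match_rate_by_group(toy_records)
print(rates)
```

A single pooled error rate over these toy records would hide the fact that one group is falsely matched twice as often as the other, which is why disaggregated reporting matters before such a system is used to name suspects.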

Black Womanhood within the Tech Ecosystem

From Bias to Discrimination

Systemic Enactments of Algorithmic Discrimination

Structural Enactments of Algorithmic Discrimination

Toward a Solution

Bibliography
