In August 2020, the Mozilla Foundation released its trustworthy artificial intelligence (AI) white paper. The paper applied Mozilla's theory of change to the development of AI in consumer technology, defined as "internet products and services aimed at a wide audience" [2]. While AI has shown the potential to transform nearly every aspect of human society, the research team also identified several challenges it presents: monopoly and centralization, data privacy and governance, bias and discrimination, accountability and transparency, industry norms, exploitation of workers and the environment, and safety and security.

AI has immense potential to improve our quality of life, but integrating complex computation into the platforms and products we use every day could compromise our security, safety, and privacy. Unless critical steps are taken to make these systems more trustworthy, the development of AI runs the risk of deepening existing power inequalities. [2]

August 2020 also marked the end of a summer of discussion and activism about race, white supremacy, and the experiences of Black people globally. The trustworthy AI white paper's discussion of AI's outsized impacts on social power, inequity, and hierarchy can be read as an acknowledgment that artificial intelligence designed without trustworthy approaches can advance matrices of domination [12]. Coined by Patricia Hill Collins, this term captures how the power dynamics driving inequality are not controlled by a single group but are complex, relational, and contextual. Power, one's relationship to it, and the ways in which it affects individuals and groups together form a shifting and intersectional [13] dynamic.

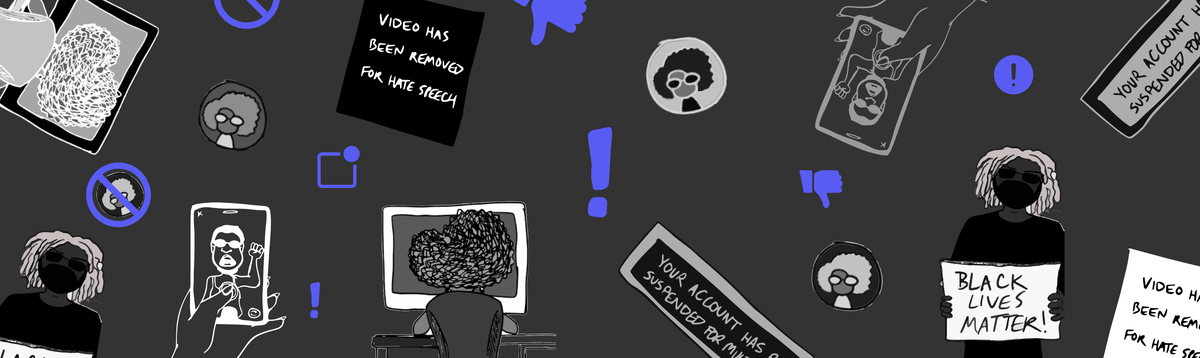

The intersection of race, gender, and technology is a cutting-edge field with groundwork laid by scholars such as Safiya Noble, Timnit Gebru, Joy Buolamwini, Ruha Benjamin, Ayanna Howard, Alondra Nelson, Rediet Abebe, Meredith Broussard, and Deborah Raji, among many others. The challenges of AI can produce systemic harms for those marginalized by both race and gender, such as Black women. While the understanding that artificial intelligence is neither a neutral nor a harmless technology has begun to permeate the mainstream, the idea that it can operationalize oppression at scale remains radical and misunderstood. Interpersonal and institutional bias already produce outsized power inequalities across the world. What happens when these inequalities are driven at scale by artificial intelligence? Every community's experience has a unique relationship to the challenges of AI, but considering Black women consequently entails considering all who dwell simultaneously on the peripheries and intersections of society.

Only a handful of tech giants have the resources to build AI, stifling innovation and competition.

Black women in America have unique experiences living among and within the matrices of domination discussed earlier: the interrelated structures that govern power relations in our society. Because tech-enabled globalization centers the work of the US tech industry, the challenges within the US and the social factors of the West are also carried forth through the cultural, systemic, and institutional influences on our increasingly technologically mediated lives. In 2012, Dourish and Mainwaring challenged the presumed universality of knowledge about new technologies, which is produced most often within Western research hubs [14]. In conversations about bias in AI systems, the mistreatment of Black women overlaps with the reinforcement of harmful stereotypes about them. Leaders of the tech industry are predominantly white cisgender men who operate in a “culture of [no] culture” [9], in which the lack of diverse experiences and perspectives ultimately produces white ideals, white philosophies, and white-centric systems as the foundation of the algorithmic operations we interact with daily.

More than ten years later, we are seeing the biases of the communities in which these technologies are developed propagate throughout already marginalized communities. One of the best-known challenges is algorithmic bias, which is rooted in and reflects the existing biases that Black women and other marginalized groups experience. Black women's unique sociocultural perspectives make their experiences an especially valuable lens for understanding how these biases operate and how they reinforce historical and existing social inequities.

Black Womanhood within the Tech Ecosystem

From Bias to Discrimination

Systemic Enactments of Algorithmic Discrimination

Structural Enactments of Algorithmic Discrimination

Toward a Solution

Bibliography