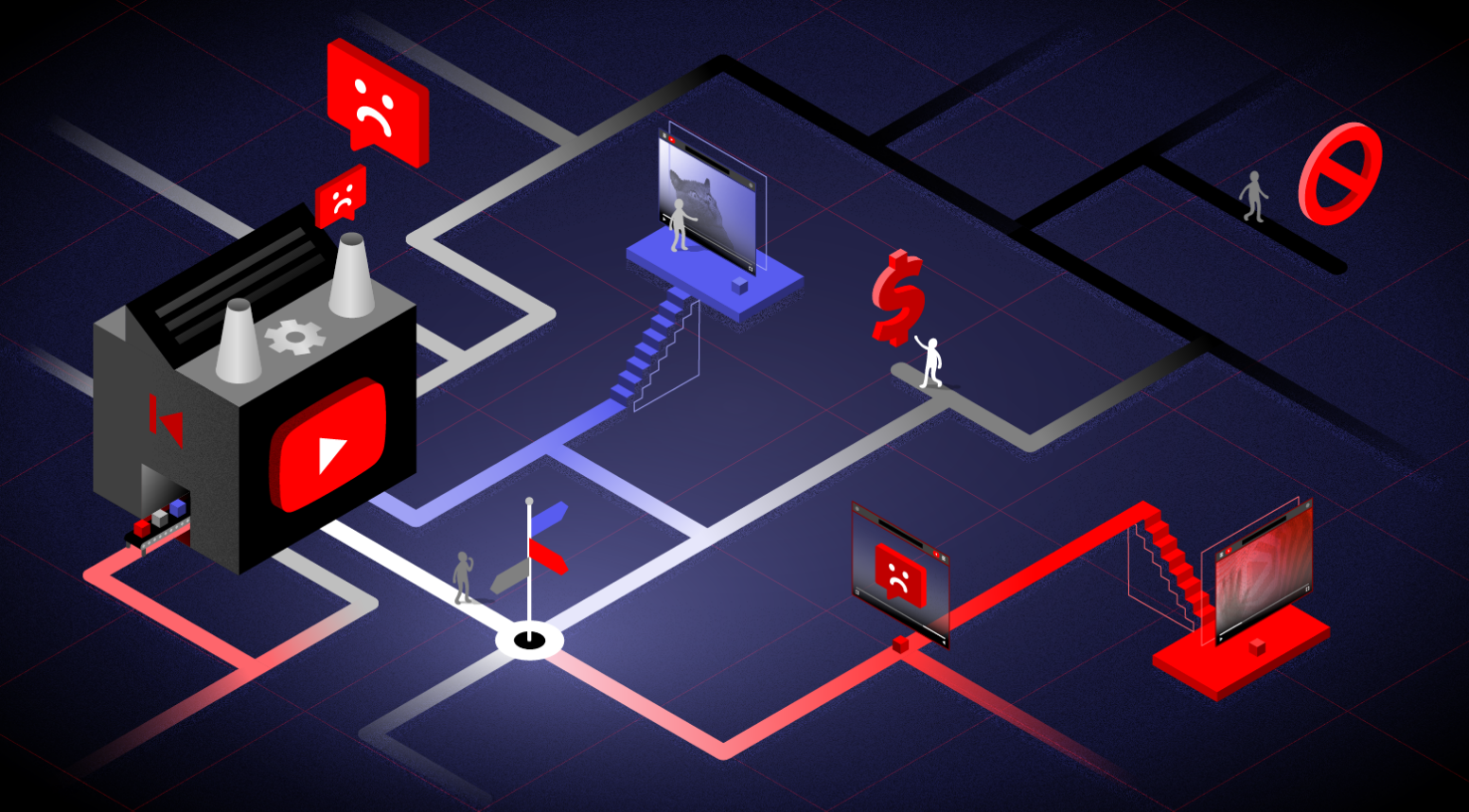

Research conducted using data donated by thousands of YouTube users reveals that YouTube's algorithm is recommending videos containing misinformation, violent content, hate speech, and scams.

Research also finds that people in non-English-speaking countries are far more likely to encounter disturbing videos.

(July 7, 2021) -- YouTube’s controversial algorithm is recommending videos considered disturbing and hateful that often violate the platform’s very own content policies, according to a 10-month-long crowdsourced investigation released today by Mozilla. The in-depth study also found that people in non-English-speaking countries are far more likely to encounter videos they consider disturbing.

Mozilla conducted this research using RegretsReporter, an open-source browser extension that converted thousands of YouTube users into YouTube watchdogs. People voluntarily donated their data, providing researchers access to a pool of YouTube’s tightly-held recommendation data. The research is the largest-ever crowdsourced investigation into YouTube’s algorithm.

Highlights of the investigation include:

- Research volunteers encountered a range of regrettable videos, reporting everything from COVID fear-mongering to political misinformation to wildly inappropriate “children's” cartoons

- The non-English-speaking world is most affected, with the rate of regrettable videos being 60% higher in countries that do not have English as a primary language

- 71% of all videos that volunteers reported as regrettable were actively recommended by YouTube’s very own algorithm

- Almost 200 videos that YouTube's algorithm recommended to volunteers have now been removed from YouTube, including several that YouTube deemed to have violated its own policies. These videos had a collective 160 million views before they were removed

“YouTube needs to admit their algorithm is designed in a way that harms and misinforms people,” says Brandi Geurkink, Mozilla’s Senior Manager of Advocacy. “Our research confirms that YouTube not only hosts, but actively recommends videos that violate its very own policies. We also now know that people in non-English speaking countries are the most likely to bear the brunt of YouTube’s out-of-control recommendation algorithm.”

Geurkink continues: “Mozilla hopes that these findings—which are just the tip of the iceberg—will convince the public and lawmakers of the urgent need for better transparency into YouTube’s AI.”

Data collected through the study paints a vivid picture of how YouTube’s algorithm amplifies harmful, debunked, and inappropriate content. One person watched videos about the U.S. military and was then recommended a misogynistic video titled “Man humilitates feminist in viral video.” Another person watched a video about software rights and was then recommended a video about gun rights. And a third person watched an Art Garfunkel music video and was then recommended a highly sensationalized political video titled “Trump Debate Moderator EXPOSED as having Deep Democrat Ties, Media Bias Reaches BREAKING Point.”

While the research uncovered numerous examples of hate speech, debunked political and scientific misinformation, and other categories of content that would likely violate YouTube’s Community Guidelines, it also uncovered many examples that paint a more complicated picture of online harm. Many of the reported videos may fall into the category of what YouTube calls “borderline content”: videos that “skirt the borders” of its Community Guidelines without actually violating them.

Other highlights of the report include:

The algorithm is the problem

- Recommended videos were 40% more likely to be regretted than videos people searched for.

- Several Regrets recommended by YouTube’s algorithm were later taken down for violating the platform’s own Community Guidelines. Around 9% of recommended Regrets have since been removed from YouTube, but only after racking up a collective 160 million views.

- In 43.6% of cases where Mozilla had data about videos a volunteer watched before a regret, the recommendation was completely unrelated to the previous videos that the volunteer watched.

- YouTube Regrets tend to perform extremely well on the platform, with reported videos acquiring 70% more views per day than other videos watched by volunteers.

YouTube’s algorithm does not treat people equally

- The rate of YouTube Regrets is 60% higher in countries that do not have English as a primary language.

- Pandemic-related reports are particularly common in non-English languages: 14% of English-language regrets are pandemic-related, compared with 36% of non-English regrets.

To address the problem, Mozilla researchers recommend a range of fixes for policymakers and platforms. Says Geurkink: “Mozilla doesn’t want to just diagnose YouTube’s recommendation problem; we want to solve it. Common sense transparency laws, better oversight, and consumer pressure can all help rein in this algorithm.”

The research’s recommendations include:

- Platforms should publish frequent and thorough transparency reports that include information about their recommendation algorithms

- Platforms should give people the option to opt out of personalized recommendations

- Platforms should create risk management systems devoted to recommendation AI

- Policymakers should enact laws that mandate AI system transparency and protect independent researchers

~

On methodology: Mozilla used rigorous quantitative and qualitative methods when conducting this research. The statistical analysis calculated counts, proportions, and rates, broken down by relevant variables. All differences described in this report are statistically significant at the p < 0.05 level. Mozilla also convened a working group of experts with backgrounds in online harms, freedom of expression, and tech policy to watch a sample of the reported videos and then develop a conceptual framework for classifying them based on YouTube’s Community Guidelines. A team of 41 research assistants employed by the University of Exeter then used this conceptual framework to assess the reported videos. Learn more in the “Methodology” section of the report.

About this work: For years, YouTube has recommended health misinformation, political disinformation, hateful rants, and other regrettable content. Investigations by Mozilla, the Anti-Defamation League, the New York Times, the Washington Post, the Wall Street Journal, and several other organizations and publications have revealed how these recommendations misinform, polarize, and radicalize people. Unfortunately, YouTube has met criticism with inertia and opacity. Harmful recommendations persist on the platform, and third-party watchdogs still have no insight into how serious or frequent they are.

As part of our trustworthy AI work, Mozilla has urged YouTube for years to address this problem. We’ve provided fellowships and awards to others studying this topic; collected people’s most alarming stories; provided concrete recommendations to YouTube; hosted public discussions; and more.