YouTube Regrets

Mozilla and 37,380 YouTube users conducted a study to better understand harmful YouTube recommendations. This is what we learned.

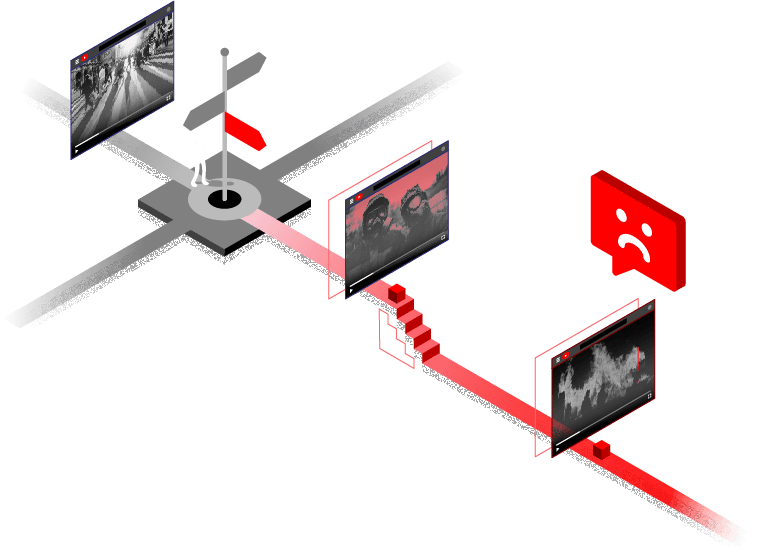

In 2019, Mozilla collected countless stories from people whose lives were impacted by YouTube’s recommendation algorithm. People had been exposed to misinformation, had developed unhealthy body images, and had become trapped in bizarre rabbit holes. The more stories we read, the more we realized how central YouTube has become to the lives of so many people, and just how much YouTube recommendations can affect their wellbeing.

Yet when confronted about its recommendation algorithm, YouTube’s routine response is to deny and deflect.

Faced with YouTube’s continuous refusal to engage, Mozilla built a browser extension, RegretsReporter, that allows people to donate data about the YouTube videos they regret watching on the platform. Through one of the biggest crowdsourced investigations into YouTube, we’ve uncovered new information that warrants urgent attention and action from lawmakers and the public.

We now know that YouTube is recommending videos that violate their very own content policies and harm people around the world — and that needs to stop.

What is a YouTube Regret?

The concept of a “YouTube Regret” was born out of a crowdsourced campaign that Mozilla developed in 2019 to collect stories about YouTube’s recommendation algorithm leading people down bizarre and sometimes dangerous pathways. We have intentionally steered away from strictly defining what “counts” as a YouTube Regret to allow people to define the full spectrum of regrettable experiences that they have on YouTube.

What a YouTube Regret looks like for one person may not be the same for another, and sometimes YouTube Regrets only emerge after weeks or months of being recommended videos, an experience that is better conveyed through stories than through video datasets.

This approach intentionally centers the lived experiences of people to add to what is often a highly legalistic and theoretical discussion. It does not yield clear distinctions about what type of videos should or should not be on YouTube or algorithmically promoted by YouTube.


“We set a high bar for what videos we display prominently in our recommendations on the YouTube homepage or through the 'Up next' panel.”

YouTube’s blog

The most commonly reported category was misinformation, especially COVID-19-related misinformation.

While our research considers the broad spectrum of YouTube Regrets, it is important to note that some videos are worse than others. YouTube has Community Guidelines that set the rules for what is and isn’t allowed on YouTube. Together with a team of research assistants from the University of Exeter, we evaluated the videos reported to us against these Community Guidelines and made our own determination of which videos should either not be on YouTube or not be recommended by YouTube. Among those videos, the most common applicable category was misinformation (especially COVID-19-related misinformation). The next largest categories were violent or graphic content, hate speech, and spam/scams. Other notable categories include child safety, harassment & cyberbullying, and animal abuse.

[Chart: Types of Regretted Content]

Our research is powered by real YouTube users.

Specifically: 37,380 volunteers across 190 countries installed Mozilla’s RegretsReporter browser extensions for Firefox and Chrome.

For this report, we gathered 3,362 reports from 1,662 volunteers in 91 countries.

Reports were submitted between July 2020 and May 2021. Volunteers who downloaded the extension but did not file a report were also an important part of our study: their data, for example how often they use YouTube, was essential to our understanding of how frequent regrettable experiences are on YouTube and how this varies between countries.

After delving into the data with a team of research assistants from the University of Exeter, Mozilla came away with three major criticisms of YouTube recommendations, spanning a problematic algorithm, poor corporate oversight, and geographic disparities. Our past research also shows that these problems can inflict lasting harm on people.

Here’s what we found

Most of the videos people regret watching come from recommendations.

YouTube Regrets are primarily a result of the recommendation algorithm, meaning videos that YouTube chooses to amplify, rather than videos that people sought out.

71% of all Regret reports came from videos recommended to our volunteers, and recommended videos were 40% more likely to be regretted than videos people searched for.

The YouTube Regrets our volunteers flagged had tons of views, but it’s impossible to tell how many of those views came from YouTube’s recommendation algorithm, because YouTube won’t release this information.

Reported videos: 5,794 views per day (median)

Other videos: 3,312 views per day (median)

What we do know is that reported videos seemed to accumulate views faster than videos that were not reported.

At the time they were reported, YouTube Regrets had a median of 5,794 views per day they were on the platform, while other videos our volunteers watched had only 3,312 views per day.
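For a rough sense of scale, dividing the two medians above suggests that reported videos were gaining about 75% more views per day than other videos. This is only a simple ratio of the published medians, not the statistical method used in the full report:

```latex
\frac{\text{median views/day (reported videos)}}{\text{median views/day (other videos)}}
= \frac{5794}{3312} \approx 1.75
```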

YouTube recommends videos that violate their own policies.

Our data shows that YouTube’s algorithm recommended several videos that were later removed from the platform for violating YouTube’s own Community Guidelines.

In 40% of cases where recommended YouTube Regrets were taken down from YouTube, YouTube did not provide data about the reason why these videos were removed.

What we do know is that, at the time they were reported, these videos had racked up a collective 160 million views, an average of 760 thousand views per video, accrued over an average of about five months on the platform. It is impossible to tell how many of these views were a result of recommendations, because YouTube won’t release this information.

160,000,000 views over ~5 months

760,000 views per video (average)

Non-English speakers are hit the hardest.

When YouTube is called out for recommending borderline content, the company usually boasts that their policy changes have led to a “70% average drop in watch time of this content coming from non-subscribed recommendations in the U.S.” But what about the rest of the world?

We found that the rate of regrets is 60% higher in countries where English is not the primary language.

This is consistent with statements made by YouTube suggesting that it focused these policy changes on English-speaking countries first.

*Among countries classified as having English as a primary language, the rate is 11.0 Regrets per 10,000 videos watched (95% confidence interval: 10.4 to 11.7). In countries with a non-English primary language, the rate is 60% higher, at 17.5 Regrets per 10,000 videos watched (95% confidence interval: 16.8 to 18.3).
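The “60% higher” figure follows from the two point estimates. Assuming the rate is the number of Regrets divided by the number of videos watched, scaled to 10,000 (our reading of the note above, not a formula quoted from the full report), the comparison works out as:

```latex
\text{rate} = \frac{\text{Regrets}}{\text{videos watched}} \times 10{,}000,
\qquad
\frac{\text{rate}_{\text{non-English}}}{\text{rate}_{\text{English}}} = \frac{17.5}{11.0} \approx 1.59
```

A ratio of about 1.59 means roughly 59% higher, which rounds to the 60% quoted above. The two 95% confidence intervals (10.4 to 11.7 and 16.8 to 18.3) do not overlap.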

36% of non-English videos were pandemic-related

14% of English videos were pandemic-related

We also found that misleading or harmful pandemic-related videos are especially prevalent among non-English regrets.

Of the YouTube Regrets that we determined shouldn’t be on YouTube or shouldn’t be recommended by YouTube, we found that only 14% of English videos were pandemic-related. But among non-English videos, the rate is 36%.

YouTube Regrets can alter people’s lives forever.

Our past work has shown that these video recommendations can have significant impacts on people’s lives.

Our recommendation: Urgent action must be taken to rein in YouTube’s algorithm.

YouTube’s algorithm drives 700 million hours of watch time on the platform every day. The consequences are simply too great to trust YouTube to fix this on its own. Below is a summary of Mozilla’s recommendations to YouTube, policymakers, and people who use YouTube; details on these recommendations can be found in the full report.

Policymakers must require YouTube to release adequate public data about their algorithm and create research tools and legal protections that allow for real, independent scrutiny of the platform.

YouTube and other companies must publish audits of their algorithms and give people meaningful control over how their data is used for recommendations, including allowing people to opt out of personalized recommendations.

People who use YouTube should learn how YouTube recommendations work and download RegretsReporter to contribute data to future crowdsourced research.

Mozilla will continue to operate RegretsReporter as an independent tool for scrutinising YouTube’s recommendation algorithm. We plan to update the extension to give people an easier way to access YouTube’s user controls to block unwanted recommendations, and will continue to publish our analysis and future recommendations.