Mozilla celebrates all the participants at Interspeech 2021, working at the forefront of groundbreaking speech technology and research.

Mozilla’s involvement in speech technology is grounded in Common Voice, the world’s largest open voice dataset, which is designed to democratize voice technology. It is used by researchers, academics, and developers around the world.

Contributors mobilize their own communities to donate speech data to the Mozilla Common Voice (MCV) public database, which anyone can then use to train voice-enabled technology. As of the latest release, the Common Voice dataset contains 13,905 hours of speech, an increase of 4,622 hours over the previous release in January 2021.

NVIDIA has partnered with Mozilla since January 2021, supporting the platform and its community as they build a bigger and better publicly available dataset, a cornerstone for unlocking the power of voice technology for all languages.

As part of NVIDIA’s collaboration with Mozilla Common Voice, the models trained on this and other public datasets are made available for free via an open-source toolkit called NVIDIA NeMo.

In this blog post, our partners at NVIDIA explain how you can use NeMo to fine-tune an English speech recognition model on a Japanese dataset from Mozilla Common Voice!

Speech Model Support For Multiple Languages:

Languages play a crucial role in the global deployment of conversational AI models. There are around 6,000 languages spoken in the world. For deep learning models to understand these languages, they need to be trained on large language-specific datasets that have been processed into a form the model can consume during training. Obtaining such high-quality datasets is challenging. By partnering with Mozilla, we have streamlined the process of obtaining spoken-language datasets from MCV, and we provide scripts to prepare the dataset and build a model using NeMo.
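To make the dataset preparation step more concrete, here is a minimal sketch of turning Common Voice metadata into the JSON-lines manifest that NeMo ASR training reads, with one `audio_filepath`, `duration`, and `text` entry per clip. It is an illustration, not the preparation script mentioned above: the function name `mcv_rows_to_manifest`, the `durations` lookup, and the `clips` directory are assumptions made for this example, and audio conversion and duration measurement are left out.

```python
import csv
import io
import json


def mcv_rows_to_manifest(tsv_text, durations, clips_dir="clips"):
    """Build NeMo-style manifest lines from Common Voice TSV text.

    tsv_text  -- contents of a Common Voice TSV file with "path" and
                 "sentence" columns
    durations -- hypothetical lookup {clip filename: seconds}, assumed to be
                 measured elsewhere
    """
    # Common Voice sentences may contain quote characters, so disable quoting.
    reader = csv.DictReader(
        io.StringIO(tsv_text), delimiter="\t", quoting=csv.QUOTE_NONE
    )
    lines = []
    for row in reader:
        clip = row["path"]
        if clip not in durations:
            continue  # skip clips whose duration is unknown
        entry = {
            "audio_filepath": f"{clips_dir}/{clip}",
            "duration": durations[clip],
            "text": row["sentence"].strip(),
        }
        # ensure_ascii=False keeps Japanese text readable in the manifest.
        lines.append(json.dumps(entry, ensure_ascii=False))
    return lines


# Tiny made-up sample; real Common Voice files contain more columns.
sample_tsv = (
    "client_id\tpath\tsentence\n"
    "abc\tclip_0001.mp3\tこんにちは\n"
    "abc\tclip_0002.mp3\tありがとう\n"
)
sample_durations = {"clip_0001.mp3": 1.8}
manifest = mcv_rows_to_manifest(sample_tsv, sample_durations)
print("\n".join(manifest))
```

Each returned string is one line of the manifest file, so writing the list to disk with newline separators gives a file that can be referenced from a training configuration.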

NVIDIA NeMo is an open-source toolkit for researchers developing new state-of-the-art conversational AI models. It is built on top of popular frameworks such as PyTorch and PyTorch Lightning, which allows researchers to integrate their NeMo modules with PyTorch and PyTorch Lightning modules. NeMo provides domain-specific collections of modules for building Automatic Speech Recognition (ASR), Natural Language Processing (NLP) and Text-to-Speech (TTS) models. Several speech and language models are available for free through NVIDIA NGC; they are trained on multiple large open datasets for thousands of hours on NVIDIA DGX systems.

NeMo modules come with a strong typing system called Neural Types: modules accept typed inputs and produce typed outputs. Neural Types enable researchers to identify semantic and dimensionality errors while developing models. With the Hydra framework integration, NeMo also provides the flexibility to configure modules and models from a configuration file or the command line. Popular conversational AI capabilities offered by NeMo include transcription in various languages, speaker recognition and diarization, neural machine translation, and speech synthesis.

Fine-tuning Speech Recognition Model Using NeMo:

Speech recognition is the process of converting audio input into its textual representation. NeMo makes it easier to build a speech model for a new language by starting from a pre-trained English ASR model available on NGC.
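As a rough sketch of that starting point, the snippet below loads a pre-trained English CTC model and replaces its output vocabulary with the characters of the target language, using NeMo's `EncDecCTCModel.from_pretrained` and `change_vocabulary`. The checkpoint name `stt_en_quartznet15x5` and the helper name `load_english_model_for_new_language` are assumptions chosen for illustration; the notebook may use a different checkpoint and additional steps. NeMo is imported inside the function, so the definition can be read and loaded without the toolkit installed, and no training loop is shown.

```python
def load_english_model_for_new_language(vocabulary,
                                        model_name="stt_en_quartznet15x5"):
    """Load a pre-trained English ASR model and swap in a new vocabulary.

    vocabulary -- iterable of output characters for the target language
    model_name -- assumed name of a pre-trained English checkpoint
    """
    vocabulary = list(vocabulary)
    if not vocabulary:
        raise ValueError("vocabulary must contain at least one character")

    # Imported lazily: requires the nemo_toolkit[asr] package at call time.
    import nemo.collections.asr as nemo_asr

    # Downloads the English checkpoint on first use.
    model = nemo_asr.models.EncDecCTCModel.from_pretrained(model_name=model_name)

    # Rebuilds the decoder output layer for the new character set; the
    # encoder keeps its English weights and is then fine-tuned on new data.
    model.change_vocabulary(new_vocabulary=vocabulary)
    return model
```

Reusing the encoder is what makes this fine-tuning rather than training from scratch: only the decoder has to be learned entirely anew for the target language's characters.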

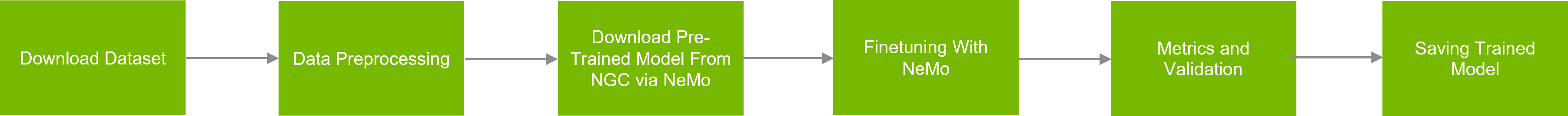

The typical workflow for training an ASR model with NeMo is shown below:

Japanese is a widely spoken language that is nevertheless low-resource for speech recognition. Developing a Japanese speech model poses unique challenges: the dataset is small, the vocabulary is huge, and thousands of tokens are used in daily conversation. Check out this notebook, where we follow the workflow above to tackle the challenging task of creating a Japanese ASR model by fine-tuning an English ASR model on the MCV Japanese dataset with NeMo.
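To illustrate the vocabulary challenge, here is a small sketch that collects a character-level vocabulary from a handful of transcripts. An English character model needs only a few dozen symbols, whereas the same procedure on a real Japanese corpus yields thousands. The function name `build_char_vocabulary`, the `min_count` threshold, and the sample sentences are assumptions made for this example, not code from the notebook.

```python
import unicodedata
from collections import Counter


def build_char_vocabulary(transcripts, min_count=1):
    """Return (sorted vocabulary, character counts) for a list of transcripts."""
    counts = Counter()
    for text in transcripts:
        # Normalize so visually identical characters share one code point.
        normalized = unicodedata.normalize("NFKC", text)
        counts.update(ch for ch in normalized if not ch.isspace())
    # Rare characters can be dropped by raising min_count.
    vocabulary = sorted(ch for ch, n in counts.items() if n >= min_count)
    return vocabulary, counts


# Made-up sample sentences standing in for MCV Japanese transcripts.
transcripts = ["こんにちは", "今日は晴れです", "ありがとう"]
vocabulary, counts = build_char_vocabulary(transcripts)
print(len(vocabulary), "distinct characters")
```

A vocabulary built this way is the kind of character list that replaces the English output symbols when the pre-trained model is adapted to Japanese.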

Conclusion:

To summarize, researchers and developers can use the MCV dataset with NeMo to accelerate the development of speech recognition models. In the notebook, we demonstrated how to create a Japanese speech model from a pre-trained English checkpoint. We anticipate that you will be able to use the same technique to create models in the language of your choice.

For more information, see the following resources:

- NVIDIA NeMo GitHub

- Automatic Speech Recognition

- Speaker Recognition

- Text-to-Speech

- NeMo Pretrained Models

Authors

Nikhil Srihari

Nikhil Srihari is a deep learning software technical marketing engineer at NVIDIA. He has experience working in a wide range of deep learning and machine learning applications in natural language processing, computer vision, and speech processing. Nikhil previously worked at Fidelity Investments and Amazon. His educational background includes a Master's degree in computer science from the University at Buffalo, and a Bachelor's degree from the National Institute of Technology Karnataka, Surathkal, India.

Sirisha Rella

Sirisha Rella is a technical product marketing manager at NVIDIA focused on speech and language-based deep learning applications. Sirisha received her master’s degree in computer science from the University of Missouri-Kansas City and was a graduate research assistant at the National Science Foundation - Center for Big Learning.

Jane Scowcroft

Jane Polak Scowcroft is a product lead working on data strategy for NVIDIA’s conversational AI products with a commitment to equity and diversity through openly available technology. She previously led the Common Voice team at Mozilla and was head of product at CSIRO’s Data61. Jane holds a master’s degree in psychology and a bachelor’s degree in computer engineering.