With so little known about how data may be shared among a wide range of companies and organizations, the drive for accountability and transparency has come alive. Everyday people want to know where their private information is going and want their voices heard by these organizations. Awareness of the need for clear transparency and accountability, in order to fix the “leaky pipes of corruption and inefficiency,” has grown in importance as evidence of corruption in numerous organizations has become public news.

Given this concept’s definition, “an openness of the governance system through clear processes and procedures and easy access to public information for citizens [stimulating] ethical awareness in public service through information sharing”, it would seem that straightforward policies are in place to address the issues that arise [3]; however, that is not the case. When organizations submit vague statements regarding their initiatives, adjust pieces of evidence, and fail to consider systematic impact, the procedures put in place become close to irrelevant [5]. Similarly, policies meant to protect against biases are largely overlooked, since these organizations do not meet the baseline standards that were initially set.

The emphasis on regulating the information that systems are able to obtain brings to light the problems we are facing now and those we could potentially have faced prior to the implementation. A simple tweet, post, or picture can reveal a person’s name, location, interests, family, and more. Even if those minor details seem to have no impact on the individual's life, questions arise: why would a company think to collect this data, how could the data be disproportionately used, and with whom could the company be sharing the information?

EXPLOITATION OF WORKERS & ENVIRONMENT

Vast amounts of computing power and human labor are used to build AI, and yet these systems remain largely invisible and are regularly exploited. The tech workers who perform the invisible maintenance of AI are particularly vulnerable to exploitation and overwork. The climate crisis is being accelerated by AI, which intensifies energy consumption and speeds up the extraction of natural resources.

Our work bias “happens automatically…by making quick judgments and assessments of people and situations, influenced by our background, cultural environment, and personal experiences” [6], and with technical advances such as artificial intelligence, creating narratives that pull in a certain demographic is becoming easier and easier. Many organizations romanticize the idea of a simpler life to push for bias-based systems, since the user will be able to find fashion, art, and daily items that catch their eye at faster rates. But with organizations “exploiting people’s private data rather than publicly available information”, the conversation should also address the drawbacks and concerns that may arise [7].

#6 Exploitation of Environment as a Microaggression

Example of the Bias: The unfair advertising that can result when a device knows a user better than they might know themselves creates a systematic issue, as it compounds the harm to those who are already negatively impacted by policies in place.

Example of Discrimination: Targeted ads may be chosen from predatory or very narrow views of what a user desires or needs, based on demographic or location-based data. In communities classified as food deserts, where various resources are scarce, advertisements may lack product diversity because they follow current trends that reflect availability and not necessarily habitual desire.

Example of the Microaggression:

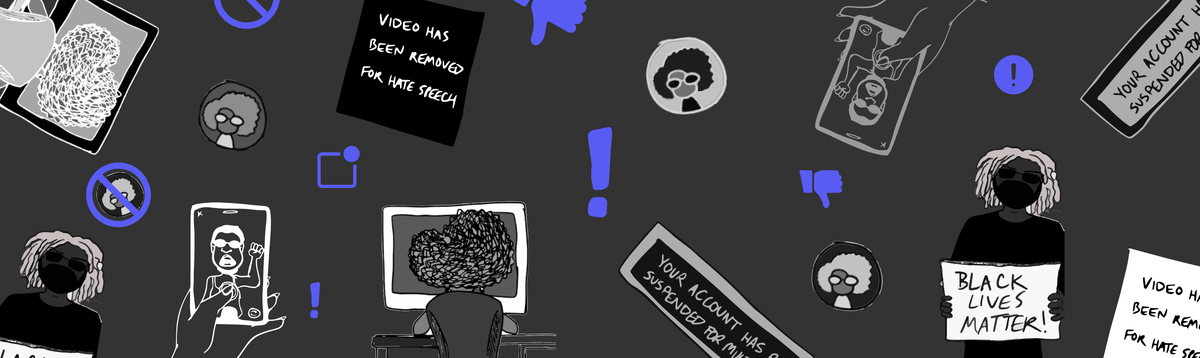

[Image by Mia S. Shaw Artist/Maker Educator/PhD candidate.]

Through the AI systems within a smoker's devices, the recurring periods when the smoker tends to backtrack on their journey and smoke a cigarette could be estimated. With this newly found information, there could be an increase in cigarette-based ads to keep the user addicted “and in need of further ‘treatments’” [7]. An organization's use of “microtargeted ads to shape consumers’ preferences and steer them into a particular consumption pattern [in turn] locking them into a lifestyle determined by their past choices and those of like minded consumers” is the kind of issue that many associate with a company's use of big data and AI systems [7].

This same restrictive loop would then cross over to racial lines, taking the cultural differences of POC and letting organizations profit from their experiences, while simultaneously keeping said groups marginal in society by pushing stereotypes as the fundamental attraction of their ads, art, or message. The increase in systematically exploited “behavioral biases of consumers, such as their inability to correctly assess the long-term effects of complex transactions or their insufficient willpower” would be unprecedented [7]. What seemed like a progressive movement to help the average person can easily be turned into a capitalistic advancement.

#7 Exploitation of the Workforce as a Microaggression

Example of the Bias: The explosion of the technology-mediated gig workforce has created opportunities to work independently for disenfranchised workers who may require more job flexibility. Yet women, and Black women in particular, are still earning less, with women's reservation wage cited as the principal reason.

Example of the Discrimination: Black women who may not feel safe working past dark decide to stop working during peak hours, thus reducing their paycheck to less than that of their male counterparts.

Example of the Microaggression:

[Image by Mia S. Shaw Artist/Maker Educator/PhD candidate.]

Since the development of rideshare and gig-based contract positions, there has been an immense push for more employment in this sector, which comes with less financial security and fewer opportunities to progress to higher positions. As in most industries, the impact is exacerbated for Black women, who are the worst hit. The growth of a two-tier labor market, “[a] type of payroll system in which one group of workers receive lower wages and/or employee benefits than another”, has divided gig workers by racial group and sex, again treating Black women as the least valuable and leaving them with the lowest pay even within this division [13]. This type of system perpetuates a loop of working paycheck to paycheck, which in turn exploits workers because they are constantly worrying whether the pay will allow them to keep the job. The scope of the disparities Black women face in industries where exploitation is so discreet will only broaden in scale and continue to fortify the employment and pay gap between Black women and any other group of people [14].

Black Womanhood within the Tech Ecosystem

From Bias to Discrimination

Systemic Enactments of Algorithmic Discrimination

Structural Enactments of Algorithmic Discrimination

Toward a Solution

Bibliography
