AI Myths, a project by Mozilla Fellow Daniel Leufer, dismantles harmful misconceptions about AI, from superintelligence to objectivity

“Artificial intelligence,” or “AI,” has become a popular word in the mainstream lexicon. It’s used in news headlines about everything from climate change to arms control. It’s used to hype new businesses and products. And it’s at the center of debates about public policy, social inequality, and a range of other issues.

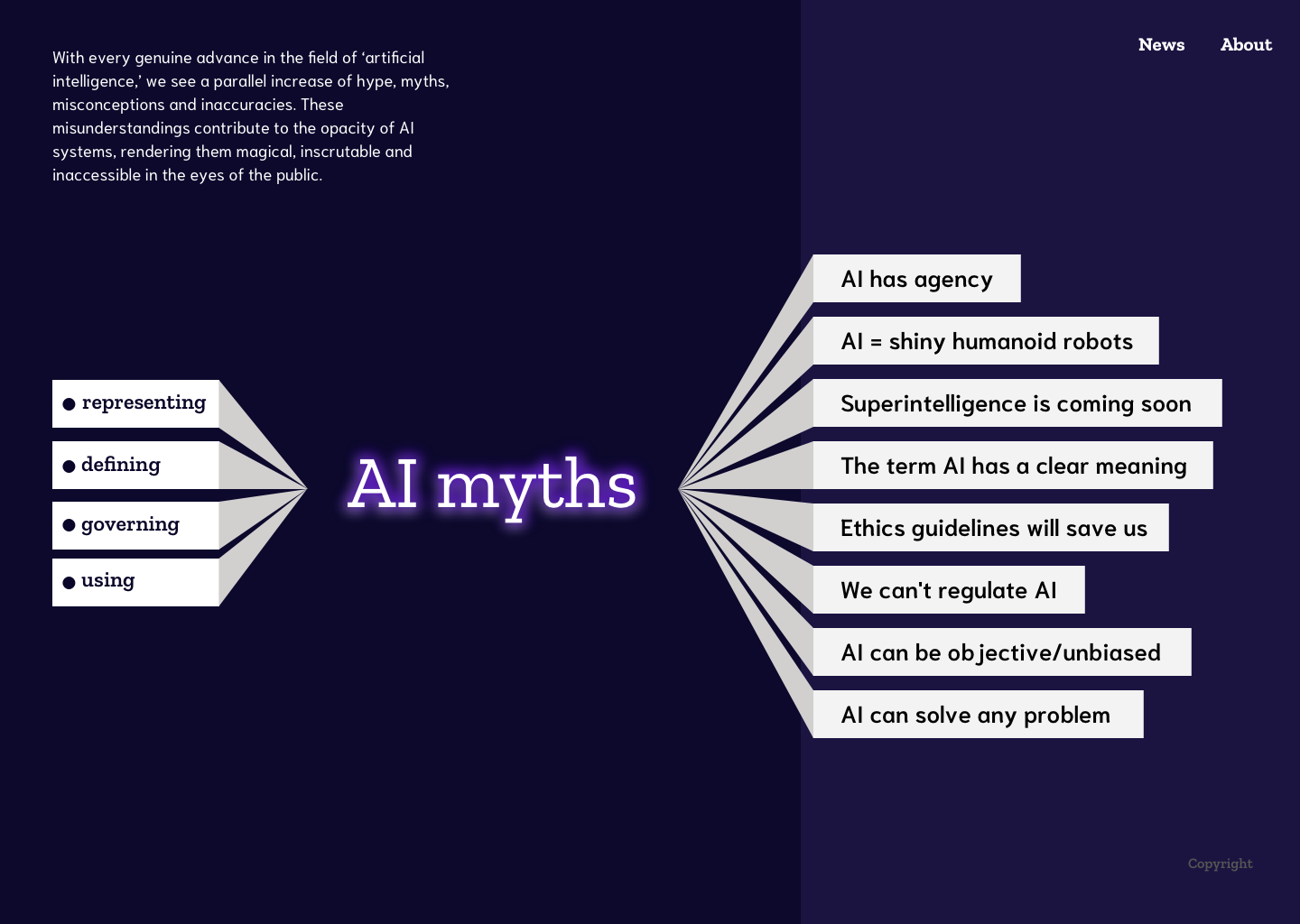

But AI’s rising popularity is coupled with ambiguity, misunderstanding, and outright fictions. What is AI, exactly? How does it work? And what is it really capable of?

Today, a new project by Mozilla Fellow Daniel Leufer identifies and debunks some of the most prevalent and harmful myths circulating about AI. AI Myths uses research, analysis, interactive experiences, and resource lists to provide readers with a clear-eyed view of what AI can — and cannot — really do.

AI Myths was conceived at the 2019 RightsCon conference, when Daniel and a group of other people working in AI policy commiserated over the widespread misconceptions about their field. In the following months, Daniel corresponded with technologists, lawyers, designers, and other AI professionals around the world to crowdsource the most harmful myths about AI.

From there, Daniel catalogued the most egregious examples and gathered materials and resources to debunk them. AI Myths aims to give policymakers, activists, and everyday consumers the knowledge they need to better understand AI.

Daniel is a Brussels-based researcher and policy analyst who studies how AI affects human rights and political decision-making. He has a PhD in philosophy from KU Leuven in Belgium and is a member of the Working Group in Philosophy of Technology there. As a Mozilla Fellow, he is embedded at the nonprofit Access Now, where he combats the myths and inaccuracies that obscure our understanding of AI.

Says Daniel: “AI systems are being integrated into essential public services, influential consumer technologies, and other high-risk areas. As a result, myths and misconceptions can prevent us from identifying and fixing real problems with these systems.”

Just 3 of the 8 misconceptions that “AI Myths” debunks:

AI has agency. News headline writers and tech evangelists like to suggest AI can accomplish fantastic feats all by itself: “AI is fighting climate change,” or “AI has developed a vaccine.” But the reality is that AI, or more specifically machine learning, is a tool used by people to do these things. The agency myth overstates the capabilities of AI; it diffuses accountability when AI does something harmful; and it masks the real human labor that powers the tech industry.

This section of AI Myths unpacks this myth and its consequences, and also features a news headline widget that reveals the absurdity behind the agency myth. For example, the widget rewrites the real headline “Would AI be better at governing than politicians?” as “Would unelected people who hide behind a smokescreen of technology be better at governing than people who were democratically elected?”

AI = shiny humanoid robots. One of the most prevalent confusions about AI can be found in the images accompanying news articles and policy reports. While the majority of these texts discuss machine learning software, the images tend to depict shiny humanoid robots performing bizarre activities. Not only is this representation inaccurate and frequently silly, but it comes with problematic baggage: These representations frequently feature sexualized robots that entrench gender stereotypes.

This section explores the implications of “terrible robot pictures,” and also includes an inventory of the very worst offenders.

The term AI has a clear meaning. Ask 10 people to define AI, and you’ll likely receive 10 different definitions. Is it software capable of machine learning? Software that is able to make complex decisions? Or, simply any software that analyzes data? The nebulous nature of the term leads to frequent (and sometimes willful) confusion, and to the term “AI” emerging as a new snake oil.

This section explores the origins and distortions of the term, and also discusses alternatives, such as using more precise terms like “machine learning” or “natural language processing,” or using alternative general terms like “complex information processing” or “cognitive automation.”

Read the full list of all 8 AI myths at www.AImyths.org.

More than ever, we need a movement to ensure the internet remains a force for good. Mozilla Fellows are web activists, open-source researchers and scientists, engineers, and technology policy experts who work on the front lines of that movement. Fellows develop new thinking on how to address emerging threats and challenges facing a healthy internet. Learn more at https://foundation.mozilla.org/fellowships/.