A series by Mozilla examining how major platforms are responding to COVID-19 misinformation

While much of the world is practicing social distancing, Facebook is helping keep us connected even as we stay physically apart. It’s also a place where people share and receive news about the pandemic, so we were happy to see Facebook join other technology companies last week in pledging to combat COVID-19 misinformation. It’s good news that Facebook and others are taking the pandemic seriously, because misinformation about the coronavirus can lead people to disregard medical advice, risk their lives, endanger others, or panic.

So far, Facebook’s efforts to limit misinformation include pushing out more accurate information, prohibiting exploitative tactics in ads, fact-checking content, and removing some content. But how well are these efforts working?

Providing accurate information

New educational pop-ups at the top of coronavirus-related searches link to expert health organizations like the World Health Organization (WHO). Facebook has also given the WHO unlimited free Facebook advertising.

In addition, Facebook has given more local governments, state and local emergency response organizations, and law enforcement agencies access to its local alerts tool, which lets them push information out to their communities at the top of the Facebook News Feed.

Is it working?

Educational pop-ups and local alerts appear to be a positive intervention. It’s unclear whether they are curbing misinformation shared by users and Pages, but Facebook uses a similar strategy against vaccine misinformation, an approach the WHO has welcomed.

Prohibiting exploitative tactics in ads

Facebook has banned advertising that makes false claims about the coronavirus or promotes panic, and on March 6 it added medical face masks to its list of prohibited products. Facebook traditionally relies on both human reviewers and technology to review ads, but because its human moderators have been sent home, the platform is now relying heavily on technology.

Is it working?

Facebook still struggles to ensure that ads meet its guidelines. While health workers in many countries are reporting shortages of basic protective gear such as face masks, Sam Jeffers, co-founder of Who Targets Me?, was still seeing ads for face masks days after they were added to the ban. Quartz reported the same issue and noted that sellers were also price gouging.

Fact checking and removal

Facebook is using AI along with its existing global network of third-party fact checkers to review content and debunk false claims about the virus on both Facebook and Instagram. When content is rated false, Facebook limits its spread and shows people accurate information from trusted health partners. Facebook then notifies users who previously shared that content, or who try to share it, that the information has been found to be false. Facebook will also remove content containing false claims or conspiracy theories that leading global health organizations and local health authorities have flagged as harmful to people who believe them.

Is it working?

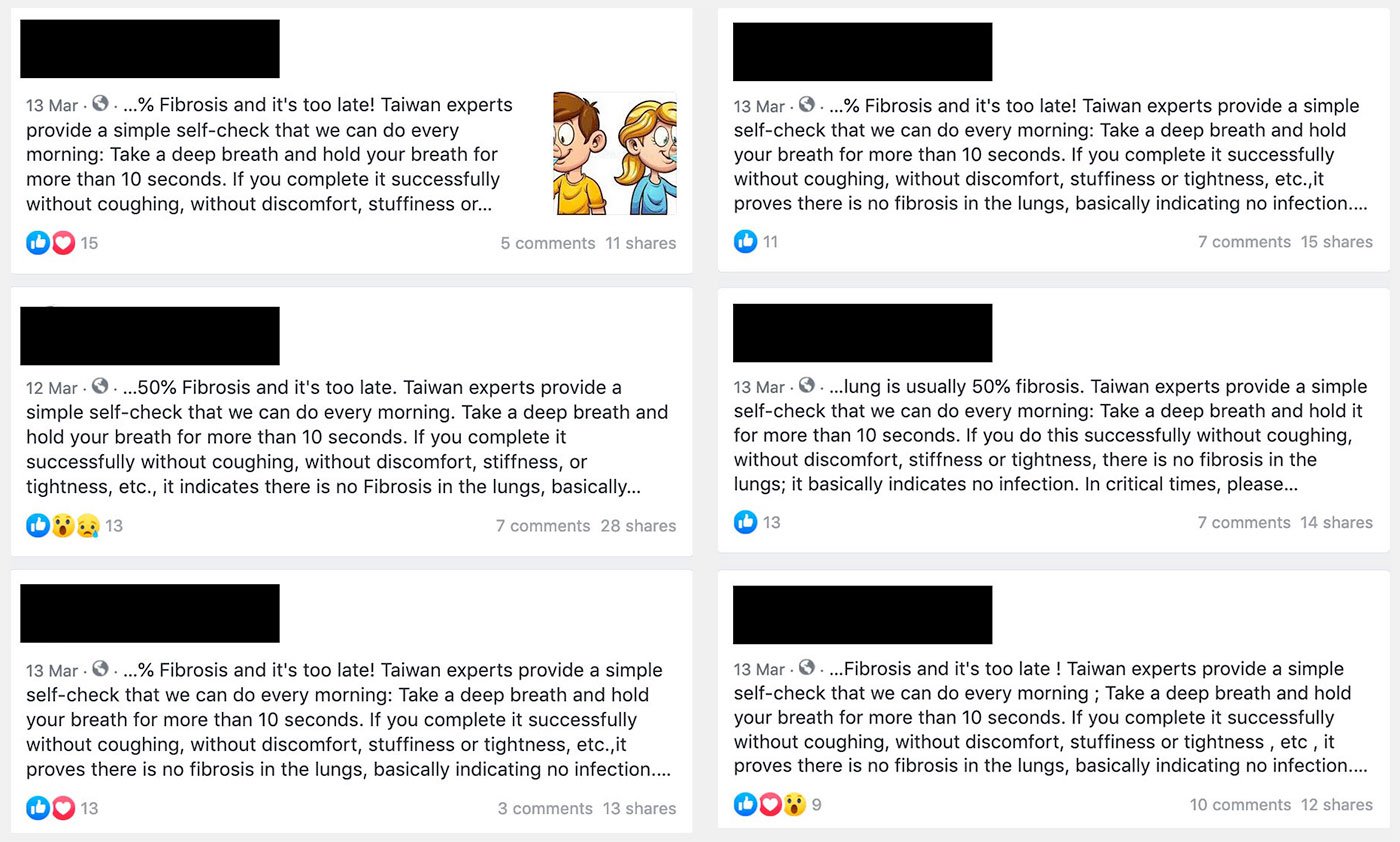

Facebook’s fact checking and removal procedure hasn’t prevented at least one very inaccurate post from going viral. Among other things, this dangerously wrong post claims that you can test for coronavirus by holding your breath, and that the virus will die in temperatures above 80°F (27°C).

A week after this post was reported, a simple search shows that versions of it are still making the rounds. When I clicked Facebook’s share button on one of them, I was not alerted that it contained misinformation, even though Facebook has said people who try to share false content will be notified.

And while Mark Zuckerberg has touted AI as the solution to Facebook’s most vexing problems, the AI component of Facebook’s moderation and removal process is known to be error-prone. Recently, it led the platform to falsely flag legitimate coronavirus news as spam and remove it. Facebook has acknowledged that, with human moderators sent home to prevent the spread of the virus, it expects more errors.

Conclusion

Facebook is not the only platform struggling to combat misinformation. This is an ongoing problem for every social media platform, and it is especially acute in times like these.

We think Facebook made the right call in sending staff home, and we understand its need to rely more heavily on AI. While we’ve criticized Facebook in the past, we’re encouraged by its current efforts to limit misinformation, and we’re rooting for it to find better ways to stop the spread of misinformation and exploitative advertising.

Meanwhile, here’s what you can do to help halt the spread of misinformation:

- Think before you share. This is the most critical piece of advice. Don’t share health information that isn’t from a reputable source such as the CDC, the WHO, or the NHS.

- Fact-check. If you see someone share false information, share a link to accurate information from a trusted source.

- Report. Facebook and many other platforms let you report misinformation. On Facebook, click or tap the “...” in the top-right corner of a post, select “find support or report post,” and then choose the problem. For misinformation, this is typically “false news.”

Up next, we’ll look at what Twitter has implemented to stop the spread of coronavirus misinformation. Stay tuned.